Choosing good parallelization schemes

The efficient usage of Fleur on modern (super)computers is ensured by a hybrid MPI/OpenMP parallelization. The -point loop and the eigenvector problem are parallelized via MPI (Message Passing Interface). In addition to that, every MPI process can be executed on several computer cores with shared memory, using either the OpenMP (Open Multi-Processing) interface or multi-threaded libraries.

In the following the different parallelization schemes are discussed in detail and the resulting parallelization scaling is sketched for several example systems.

MPI parallelization

- The -point parallelization gives us increased speed when making calculations with large -point sets.

- The eigenvector parallelization gives us an additional speed up but also allows to tackle larger systems by reducing the amount of memory each MPI process uses.

Depending on the specific architecture, one or the other or both levels of MPI parallelization can be used.

-point parallelization

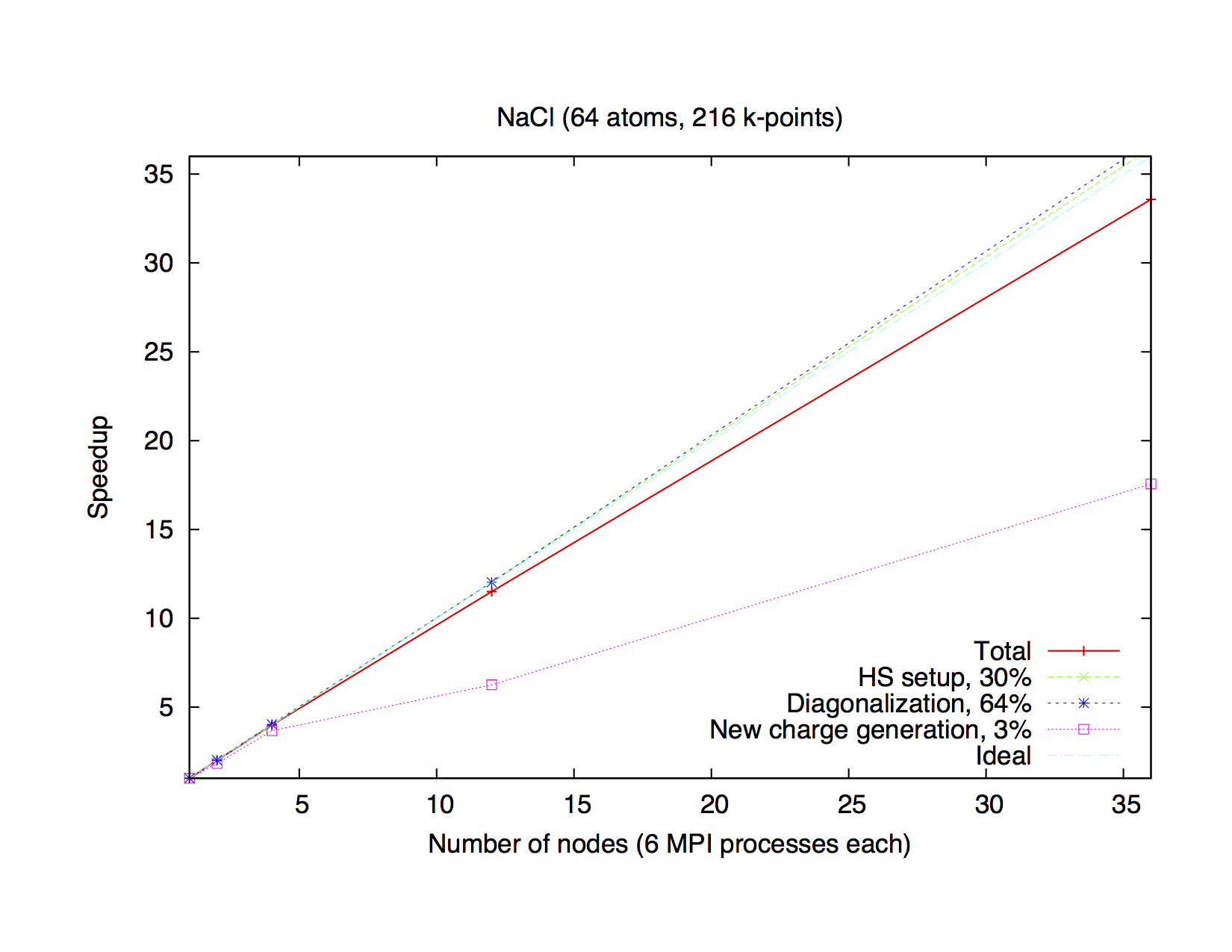

This type of parallelization is always chosen if the number of -points (K) is a multiple of the number of MPI processes (P). If is not an integer, a mixed parallelization will be attempted and M MPI processes will work on a single k-point, so that is an integer. This type of parallelization can be very efficient because the three most time-consuming parts of the code (Hamiltonian matrix setup, diagonalization and generation of the new charge density) of different points are independent of each other and there is no need to communicate during the calculation. Therefore this type of parallelization is very beneficial, even if the communication between the nodes/processors is slow. The drawback of this type of parallelization is that the whole matrix must fit in the memory available for one MPI process, i.e., on each MPI process sufficient memory to solve a single eigenvalue-problem for a single point is required. The scaling is good as long as many points are calculated and the potential generation does not become a bottleneck. In the following figure the saturation of the memory bandwidth might cause the deviation of the speedup from the ideal.

Typical speedup of the k-point parallelization for a small system (NaCl, 64 atoms, 216 k-points) on a computer cluster (Intel E5-2650V4, 2.2 GHz). Execution time of one iteration is 3 hours 53 minutes.

Eigenvector Parallelization

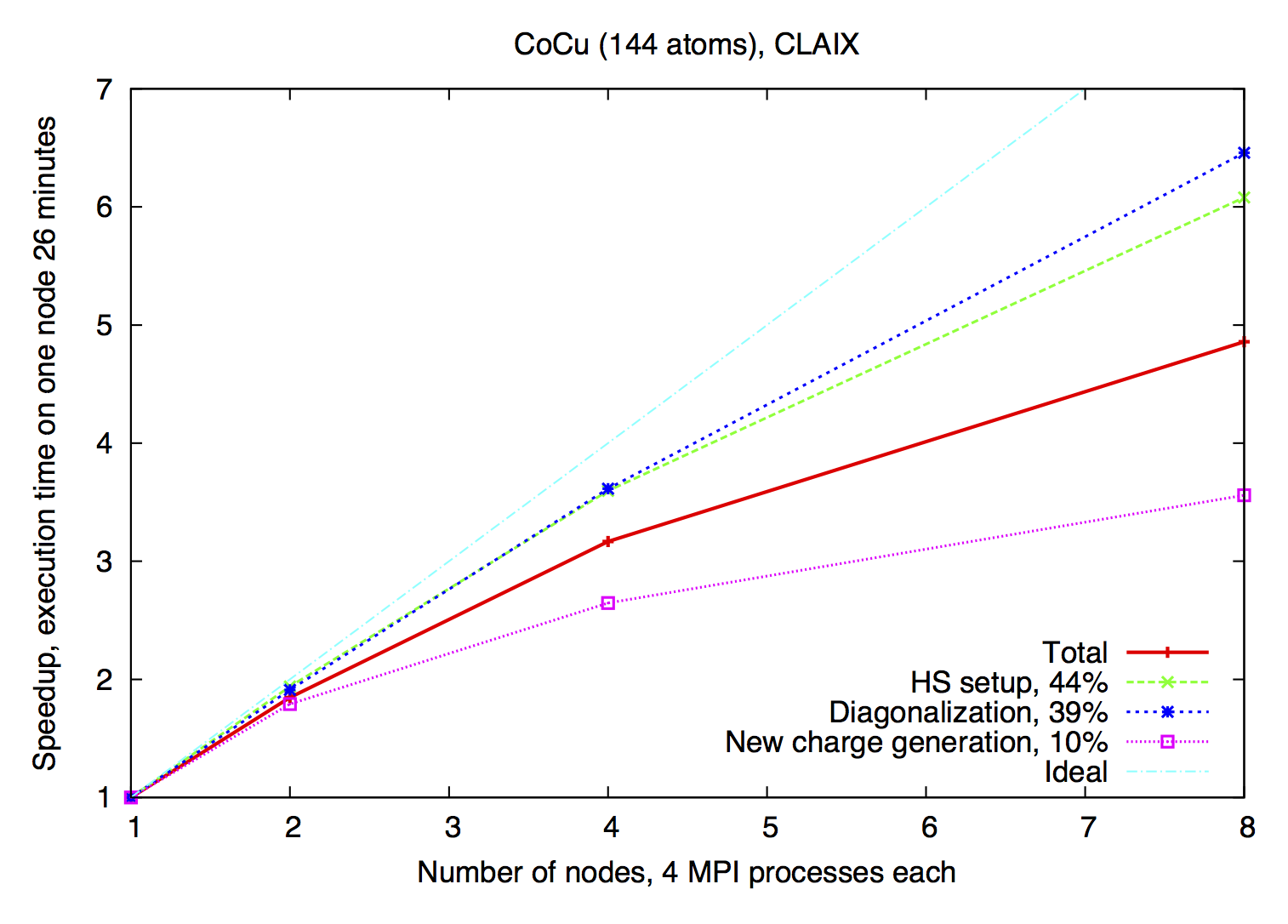

If the number of points is not a multiple of the number of MPI processes, every point will be parallelized over several MPI processes. It might be necessary to use this type of parallelization to reduce the memory usage per MPI process, i.e. if the eigenvalue-problem is too large. This type of parallelization depends on external libraries which can solve eigenproblems on parallel architectures. The Fleur code contains interfaces to ScaLAPACK, ELPA and Elemental. Furthermore, for a reduction of the memory footprint it is also possible to use the HDF5 library to store eigenvector data for each point on the disc. However, this implies a performance drawback.

Example of eigenvector parallelization of a calculation with 144 atoms on the CLAIX (Intel E5-2650V4, 2.2 GHz).

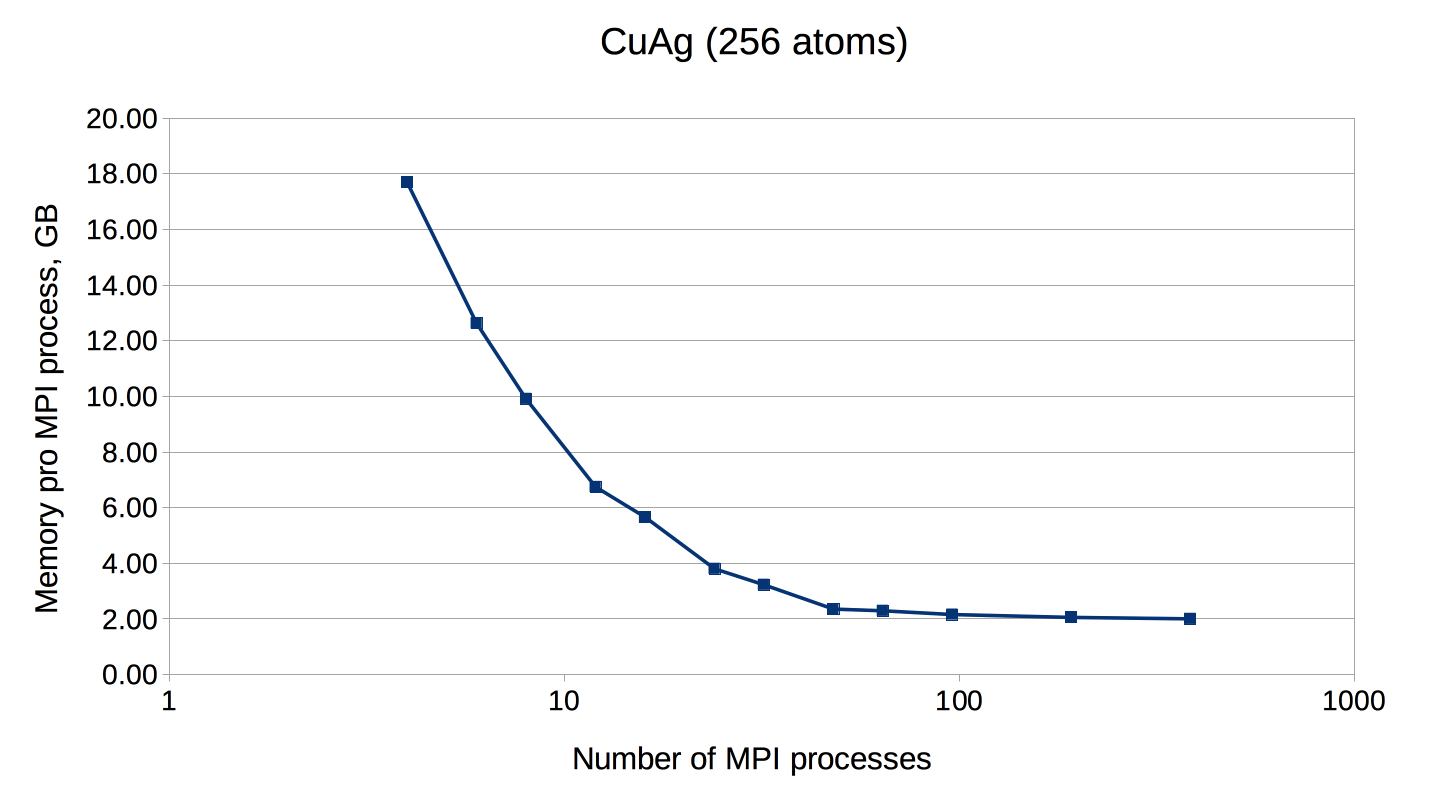

An example of FLEUR memory requirements depending on the amount of MPI ranks. Test system: CuAg (256 atoms, 1 point). Memory usage was measured on the CLAIX supercomputer (Intel E5-2650V4, 2.2 GHz, 128 GB per node).

OpenMP parallelization

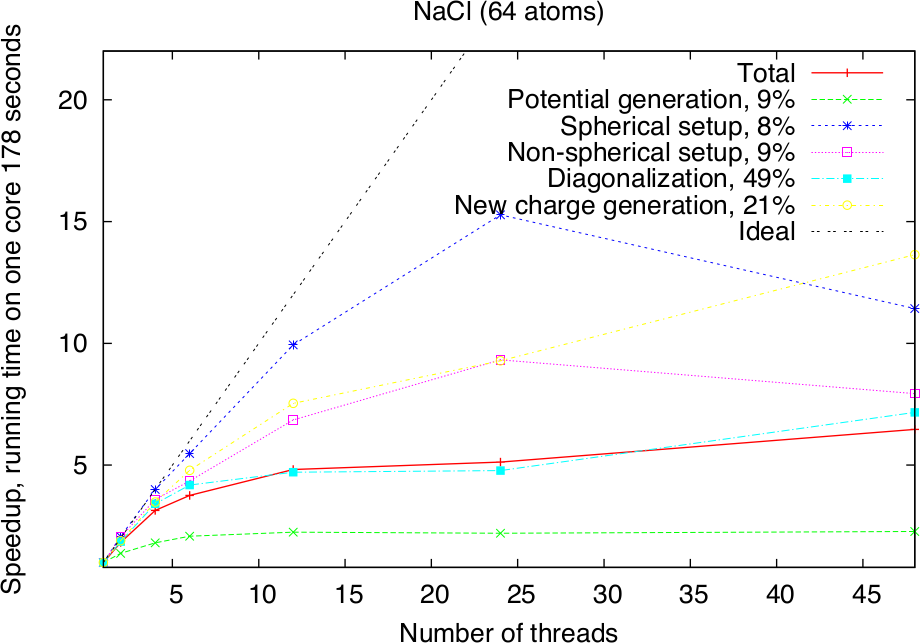

Modern HPC systems are usually cluster systems, i.e., they consist of shared-memory computer nodes connected through a communication network. It is possible to use the distributed-memory paradigm (MPI parallelization) also inside the node, but in this case the memory available for every MPI process will be considerably smaller. Imagine to use a node with 24 cores and 120 GB memory. Starting a single MPI process will make all 120 GB available to this process, two will only get 60 GB each and so on, if 24 MPI processes are used, only 5 GB of memory will be available for each of them. The intra-node parallelism can be utilized more efficiently when using the shared-memory programming paradigm, for example through the OpenMP interface. In the Fleur code the hybrid MPI/OpenMP parallelization is realised by directly implementing OpenMP pragmas and the usage of multi-threaded libraries. Note that in the examples above OpenMP parallelization was used together with the MPI parallelization. To strongly benefit from this type of parallelization, it is crucial that Fleur is linked to efficient multithreaded software libraries. A good choice is the ELPA library and the multithreaded MKL library. The following figure shows the pure OpenMP parallelization scaling on a single compute node.

Pure OpenMP parallelization scaling for NaCl (64 atoms) on a single node (Intel Skylake Platinum 8160, 2x24 cores).

Parallel execution: best practices

Since there are several levels of parallelization available in Fleur: -point MPI parallelization, eigenvalue MPI parallelization, and multi-threaded parallelization, it is not always an easy decision how to use the available HPC resources in the most effective way: How many nodes are needed, how many MPI processes per node, how many threads per MPI process. A good choice for the parallelization is based on several considerations.

First of all, you need to estimate a minimum amount of nodes. This depends strongly on the size of the unit cell and the memory size of the node. In the table below some numbers for a commodity Intel cluster with 120 GB and 24 cores per node can be found - for comparable unit cells (and hardware) these numbers can be a good starting point for choosing a good parallelization. The two numbers in the "#nodes" column show the range from the "minimum needed" to the "still reasonable" choice. Note that these test calculations only use a single point. If a simulation crashes with a run-out-of-memory-message, one should try to double the requested resources. The recommended number of MPI processes per node can be found in the next column. If you increasing #k-points or creating a super-cell and you have a simulation which for sure works, you can use that information. For example, if you double #k-points, just double #nodes. If you are making a supercell which contains N times more atomes, than your matrix will be N-square times bigger and require about N-square times more memory.

Best values for some test cases. Hardware: Intel Broadwell, 24 cores per node, 120 GB memory.

| Name | # k-points | real/complex | # atoms | Matrix size | LOs | # nodes | # MPI per node |

|---|---|---|---|---|---|---|---|

| NaCl | 1 | c | 64 | 6217 | - | 1 | 4 |

| AuAg | 1 | c | 108 | 15468 | - | 1 | 4 |

| CuAg | 1 | c | 256 | 23724 | - | 1 - 8 | 4 |

| Si | 1 | r | 512 | 55632 | - | 2 - 16 | 4 |

| GaAs | 1 | c | 512 | 60391 | + | 8 - 32 | 2 |

| TiO2 | 1 | c | 1078 | 101858 | + | 16 - 128 | 2 |

The next question, how many MPI processes? The whole amount of MPI parocesses is #MPI per node times #nodes. If the calculation involves several points, the number of MPI processes should be chosen accordingly. If the number of points (K) is a multiple of the number of MPI processes (P) then every MPI procces will work on a given point alone. If is not an integer, a mixed parallelization will be attempted and M MPI processes will work on a single point, so that is an integer. This means for example that if the calculation uses 48 points, it is not a good idea to start 47 MPI processes.

As for the number of OpenMP threads, on the Intel architectures it is usually a good idea to fill the node with threads (i.e. if the node consist of 24 cores and 4 MPI processes are used, each MPI process should spawn 6 threads), but not to use the hyper-threading.